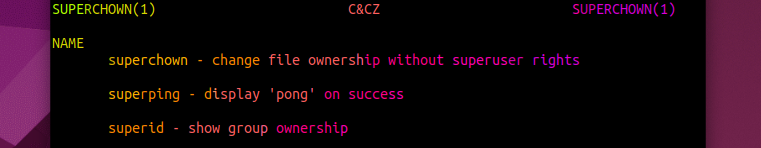

C&CZ is the IT department for education and research at the Faculty of Science of Radboud University.

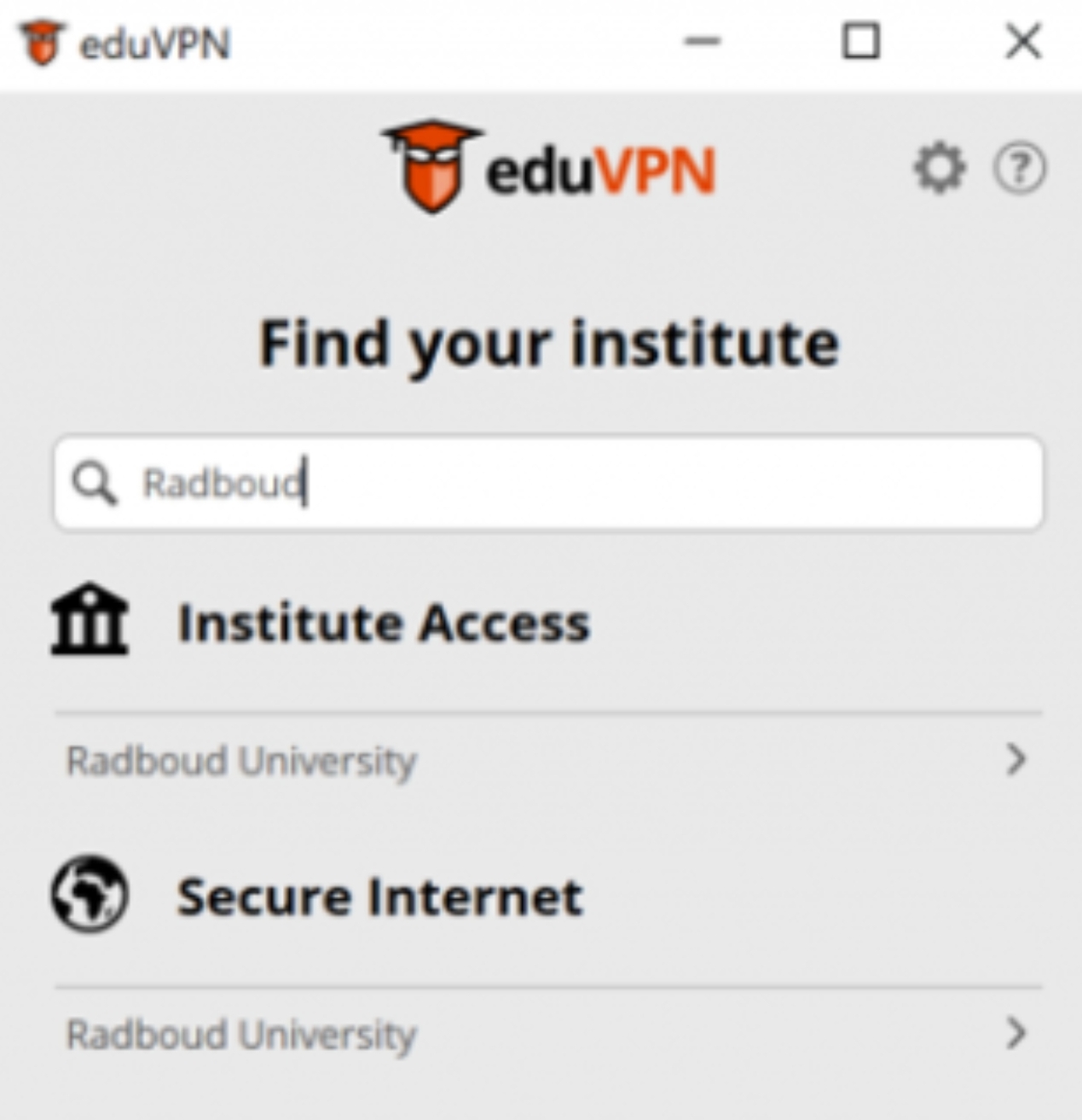

You have come to the right place for IT-related questions, in particular questions about Science accounts and Science services such as e-mail, data storage, GitLab, VPN, SSH, and surveys. We also handle questions about laptops, desktops, Linux login servers, compute clusters, software licenses, poster or 3D printing, and other IT-related matters. You can also contact us with questions or reports about IT security, privacy, or data breaches.

For questions about printing, telephones, RU accounts, MS365, the RU website, or network connections, please contact the RU helpdesk.